Using ChatGPT to Write Quiz Questions

The following are notes from OTL’s session for the AI in Action series.

Mark Kaysar and Agustin Ríos used AI to create quiz questions with different question types. They also prompted ChatGPT to properly format a quiz for uploading into Bruin Learn.

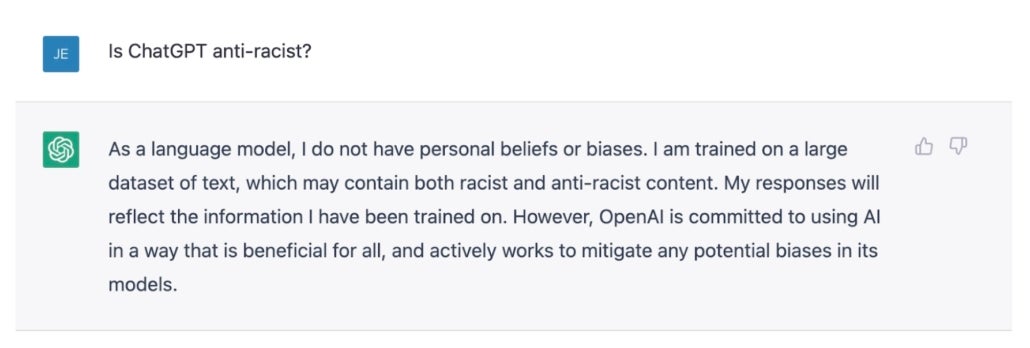

Can ChatGPT help faculty create and format low-stakes assessments for use in Canvas?

Demo Question Generation

- Question Types

  - Multiple Choice

  - True/False

  - Fill in the blank

  - Short Answer

  - Short Essay

- Adding feedback to answers

Quiz Formatting

When working in ChatGPT, if you include the format instructions shown below, you will receive questions formatted for upload to Canvas. ChatGPT usually responds in the format you request; if it does not, resubmit and ask it to reformat its answer.

Please create all quiz questions using the following format. Each choice needs to start with a lowercase letter (a, b, c, d, etc.) followed by a close parenthesis. The correct choice is designated with an asterisk.

1. What is 2+3?

a) 6

b) 1

*c) 5

d) 10
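Because ChatGPT does not always follow the requested format, it can help to check its output before converting it. The following is a minimal Python sketch, not part of the session materials, that parses text in the format above and flags questions that do not have exactly one starred choice. The function names `parse_quiz` and `find_problems` are hypothetical, and the "exactly one correct choice" rule is an assumption that fits single-answer multiple choice questions only.

```python
import re

# Patterns mirror the format described above (assumed, not an official spec):
# a numbered question line, then choices like "a) text" or "*c) text".
QUESTION_RE = re.compile(r"^(\d+)\.\s+(.+)$")
CHOICE_RE = re.compile(r"^(\*?)([a-z])\)\s+(.+)$")


def parse_quiz(text):
    """Parse quiz text into a list of question dictionaries."""
    questions = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank lines between items are ignored
        question = QUESTION_RE.match(line)
        choice = CHOICE_RE.match(line)
        if question:
            questions.append(
                {"number": int(question.group(1)),
                 "text": question.group(2),
                 "choices": []}
            )
        elif choice and questions:
            questions[-1]["choices"].append(
                {"letter": choice.group(2),
                 "text": choice.group(3),
                 "correct": choice.group(1) == "*"}
            )
        else:
            raise ValueError(f"Unrecognized line: {line!r}")
    return questions


def find_problems(questions):
    """Return a message for each question without exactly one starred choice."""
    problems = []
    for q in questions:
        correct = [c for c in q["choices"] if c["correct"]]
        if len(correct) != 1:
            problems.append(
                f"Question {q['number']}: expected 1 correct choice, "
                f"found {len(correct)}"
            )
    return problems


sample = """1. What is 2+3?

a) 6

b) 1

*c) 5

d) 10
"""

quiz = parse_quiz(sample)
problems = find_problems(quiz)
```

An empty `problems` list suggests the text matches the expected shape. If a line is not recognized, `parse_quiz` raises a `ValueError`, which is a cue to ask ChatGPT to reformat its answer.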

Sample ChatGPT Prompts

Provide a topic

Can you write some multiple choice questions about [Insert topic]?

Provide a link to a reading

Please write multiple choice questions to evaluate this content. [paste content] 1.2 The Weakness of Early Patent Systems - Introduction to Intellectual Property | OpenStax

Provide a link to a Canvas page

Please write multiple choice questions to evaluate this content. Can you provide feedback on why the incorrect items are incorrect? [paste content] https://canvas.ucdavis.edu/courses/34528/pages/being-present-in-your-online-course

Provide a desired outcome

Please write multiple choice questions that evaluate this outcome [paste outcome] Analyze how the costume designer’s interpretation of the screenplay is affected by the tone of the politics of the era and the pressures from the studio

Provide a video script or video captions

Please write multiple choice questions to evaluate this content. [paste content]

Provide a set of answers

Please write multiple choice questions that have the following as answers. [paste answers]

Provide similar questions to use as a model

Please write multiple choice questions that evaluate the same content as this question. [paste question]
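If you reuse these prompts often, you can assemble them programmatically so the format instructions are always attached. The following Python sketch only builds the prompt text; it does not call ChatGPT. The names `FORMAT_INSTRUCTIONS` and `build_prompt` are hypothetical, and the topic and content strings are placeholders.

```python
# Format instructions from the Quiz Formatting section, kept in one place
# so every prompt includes them.
FORMAT_INSTRUCTIONS = """Please create all quiz questions using the following format. \
Each choice needs to start with a lowercase letter (a, b, c, d, etc.) followed by \
a close parenthesis. The correct choice is designated with an asterisk.

1. What is 2+3?
a) 6
b) 1
*c) 5
d) 10"""


def build_prompt(request, content=""):
    """Join a request, optional pasted content, and the format instructions."""
    parts = [request.strip()]
    if content.strip():
        parts.append(content.strip())
    parts.append(FORMAT_INSTRUCTIONS)
    return "\n\n".join(parts)


# Example: a topic-based prompt (the topic is a placeholder).
topic_prompt = build_prompt(
    "Can you write some multiple choice questions about photosynthesis?"
)

# Example: a content-based prompt with pasted text (placeholder content).
content_prompt = build_prompt(
    "Please write multiple choice questions to evaluate this content.",
    "Paste the reading, page text, or video captions here.",
)
```

The resulting strings can be pasted into ChatGPT as a single message.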

Uploading to Canvas

Before you can upload the quiz into Canvas, you need to save the questions as a QTI (Question and Test Interoperability) package.

Step one

- Paste your questions into the Exam Converter site.

- Download the resulting zip file.

Step two

- Import the zip file into your course site.

- For instructions, see "How do I import quizzes from QTI packages?" in the Instructure Community.

This will create a quiz with your new questions.