Drawn from: Brundage, Miles, Shahar Avin, Jasmine Wang, Haydn Belfield, Gretchen Krueger, Gillian Hadfield, Heidy Khlaaf, et al. “Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims.” arXiv, April 20, 2020. https://doi.org/10.48550/arXiv.2004.07213.

“This report suggests various steps that different stakeholders in AI development can take to make it easier to verify claims about AI development, with a focus on providing evidence about the safety, security, fairness, and privacy protection of AI systems. Implementation of such mechanisms can help make progress on the multifaceted problem of ensuring that AI development is conducted in a trustworthy fashion.”

2.1 Third-Party Auditing

The process of AI development is often opaque to those outside a given organization, and various barriers make it challenging for third parties to verify the claims being made by a developer. As a result, claims about system attributes may not be easily verified.

2.2 Red Team Exercises

It is difficult for AI developers to address the “unknown unknowns” associated with AI systems, including limitations and risks that might be exploited by malicious actors. Further, existing red teaming approaches are insufficient for addressing these concerns in the AI context.

2.3 Bias and Safety Bounties

There is too little incentive, and no formal process, for individuals unaffiliated with a particular AI developer to seek out and report problems of AI bias and safety. As a result, broad-based scrutiny of AI systems for these properties is relatively rare.

2.4 Sharing of AI Incidents

Claims about AI systems can be scrutinized more effectively if there is common knowledge of the potential risks of such systems. However, cases of undesired or unexpected behavior by AI systems are infrequently shared since it is costly to do unilaterally.

3.1 Audit Trails

AI systems lack traceable logs of steps taken in problem-definition, design, development, and operation, leading to a lack of accountability for subsequent claims about those systems’ properties and impacts.

3.2 Interpretability

It’s difficult to verify claims about “black-box” AI systems that make predictions without explanations or visibility into their inner workings. This problem is compounded by a lack of consensus on what interpretability means.

3.3 Privacy-Preserving Machine Learning

A range of methods can potentially be used to verifiably safeguard the data and models involved in AI development. However, standards are lacking for evaluating new privacy-preserving machine learning techniques, and the ability to implement them currently lies outside a typical AI developer’s skill set.

Alongside the introduction of DALL-E and Stable Diffusion (images), TOME (slide deck presentations), SoundRaw (music), and EDGE (dance), ChatGPT (text) marks the emergence of AI in our everyday lives. Because these tools can generate human-quality output, they have spurred a great deal of conversation about what it means to be teachers, students, writers, artists, programmers, and others. The power for anyone to generate text, images, and code leads to new questions and considerations.

Jess Gregg hosted a UCLA CEILS Ed Talk, “What’s all the buzz about ChatGPT?” (February 8, 2023)

Topics shared and discussed at the UC Centers for Teaching and Learning forum, January 20, 2023

“Alarmed by A.I. Chatbots, Universities Start Revamping How They Teach” – The New York Times, January 16, 2023: “Across the country, university professors like Mr. Aumann, department chairs and administrators are starting to overhaul classrooms in response to ChatGPT, prompting a potentially huge shift in teaching and learning. Some professors are redesigning their courses entirely, making changes that include more oral exams, group work and handwritten assessments in lieu of typed ones.”

Practical Responses to ChatGPT – Montclair State University: “ChatGPT is not without precedent or competitors (such as Jasper, Sudowrite, QuillBot, Katteb, etc). Souped-up spell-checkers such as Grammarly, Hemingway, and Word and Google-doc word-processing tools precede ChatGPT and are often used by students to review and correct their writing. Like spellcheck, these tools are useful, addressing spelling, usage, and grammar problems, and some compositional stylistic issues (like overreliance on passive voice). However, they can also be misused when writers accept suggestions quickly and thus run the danger of accepting a poor suggestion.”

Using Artificial Intelligence in the Classroom – U Wisconsin-Madison: “Like with any new technology, one approach instructors can take in the classroom is to transparently make use of it. This allows you to move beyond stress over whether or not students are using AI, and instead have a voice in when, why, and how you and your class use it.”

Update Your Course Syllabus for ChatGPT: “… some easy to implement suggestions that will help you prepare for the upcoming semester”

Schools Must Embrace the Looming Disruption of ChatGPT: “Educators face a choice: They can dig in their heels, attempting to lock down assignments and assessments, or use this opportunity to imagine what comes next.”

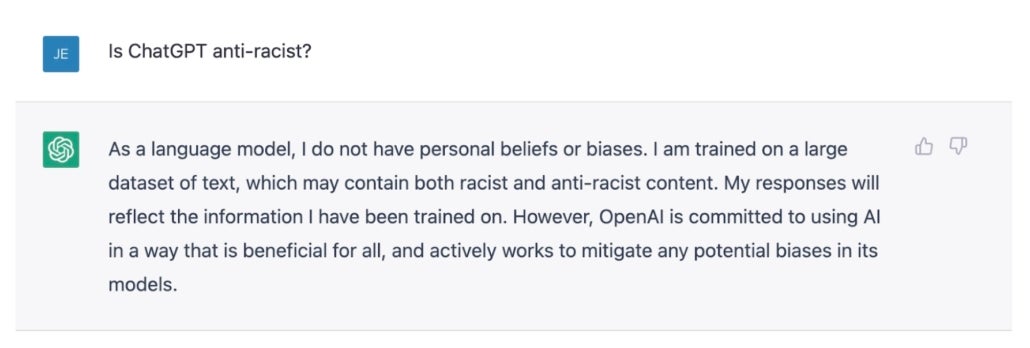

Screenshot of ChatGPT from CEILS Senior Associate Director for Educational Development Jess Gregg:

Question: “Is ChatGPT racist?” Answer: “As a language model, I do not have personal beliefs or biases. I am trained on a large dataset of text, which may contain both racist and anti-racist content. My responses will reflect the information I have been trained on. However, OpenAI is committed to using AI in a way that is beneficial for all, and actively works to mitigate any potential biases in its models.”

Microsoft shuts down AI chatbot after it turned into a Nazi – “Yesterday the company launched “Tay,” an artificial intelligence chatbot designed to develop conversational understanding by interacting with humans.… Today, Microsoft had to shut Tay down because the bot started spewing a series of lewd and racist tweets.”

Problems Identified in “Toward Trustworthy AI Development: Mechanisms for Supporting Verifiable Claims” – “Recent progress in artificial intelligence (AI) has enabled a diverse array of applications across commercial, scientific, and creative domains. With this wave of applications has come a growing awareness of the large-scale impacts of AI systems, and recognition that existing regulations and norms in industry and academia are insufficient to ensure responsible AI development.”

ChatGPT and DALL-E work on technically similar underlying foundations (“generative” machine learning models). Briefly, this class of AI tools is trained on source data (ChatGPT’s source data is text; DALL-E’s source data is images) and then produces an original output (ChatGPT produces text; DALL-E produces images). The source data is drawn from the Internet.

It has often been pointed out that ChatGPT can produce incorrect text: it will output incorrect dates, assign people to incorrect occupations or disciplines, create fictitious academic citations, etc. Because of this, it may be easier to demonstrate DALL-E to people trying to understand these technologies. When DALL-E produces an image, we don’t generally focus on whether its image output is “right or wrong.”

However, assessing ChatGPT with a “right vs. wrong” lens misses what it is doing. At this stage of its development, getting things “right” is not its goal; the mind-boggling thing to know is that, as with DALL-E, ChatGPT is a machine that automatically creates unique, original work.

Imagine your sewing machine suddenly starting to mop your floor. You prompt the sewing machine with, “Sewing machine: mop the floors.” While it may not be very good at mopping the floor (yet), the fact that the machine does it at all is the thing to marvel at:

large-scale language models trained on large amounts of multi-lingual data and source code are capable of improving the state-of-the-art across a wide variety of natural language, translation, and coding tasks, despite never having been trained to specifically perform those tasks (From: “Google Research, 2022 & beyond: Language, vision and generative models.” Emphasis added.)

Here is an example of why focusing on “right vs. wrong” is looking past the achievement. Imagine the following verse never existed and it came out of ChatGPT:

Hey diddle-diddle

The cat and the fiddle,

The cow jumped over the moon.

Overlooking what ChatGPT is doing might lead someone to say, “Well, that verse is wrong because cats can’t play the violin and cows cannot jump over the moon.” At this stage of the technology, ChatGPT is not intended to be factually correct: we should instead marvel that, given a prompt, a machine can create new, original, human-passable text.

U Wisconsin describes this distinction: “ChatGPT can write with correct grammar and confident flow, but cannot create accurate citations or write with much depth. This is because it creates word patterns, with some variability or randomness, but does not generate meaning (Warner, 2022).” (https://idc.ls.wisc.edu/guides/using-artificial-intelligence-in-the-classroom/)
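
The idea of “word patterns, with some variability or randomness” in the quote above can be illustrated with a toy sketch. The Python below is a minimal bigram sampler, not how ChatGPT is actually built (ChatGPT relies on a large neural network); the function names `build_bigram_table` and `generate` and the tiny corpus are assumptions made up for this illustration. It shows only that a program can chain plausible-looking words with no notion of what they mean.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words observed right after it."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, start, length, seed=None):
    """Produce up to `length` words by repeatedly picking a random observed follower."""
    rng = random.Random(seed)  # a seed makes the random choices repeatable
    output = [start]
    for _ in range(length - 1):
        followers = table.get(output[-1])
        if not followers:  # the last word was never followed by anything
            break
        output.append(rng.choice(followers))
    return " ".join(output)


# Hypothetical miniature "training data" for illustration only.
corpus = "the cat and the fiddle the cow jumped over the moon"
table = build_bigram_table(corpus)
print(generate(table, "the", 8, seed=0))
```

Running it with different seeds yields different word sequences, each locally plausible because every adjacent pair was seen in the corpus, yet none is checked against any fact about cats, cows, or the moon.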

These technologies are changing rapidly. Google and Microsoft are building generative AI into their search engines. In the near future, as these tools increasingly aim for factual accuracy and search engines provide more conversational output, holding the companies responsible for errors will make more sense.

“The End of Art: An Argument Against Image AIs” – This 50-minute video essay focuses on the “flawed, unethical, and deceptive environment around AI systems” and their appropriation of artistic works without attribution, compensation, or permission. The video’s description panel offers links to additional resources.

Generative Artificial Intelligence and Copyright Law – (Congressional Research Service, February 23, 2023)

Recent innovations in artificial intelligence (AI) are raising new questions about how copyright law principles such as authorship, infringement, and fair use will apply to content created or used by AI. So-called “generative AI” computer programs—such as OpenAI’s DALL-E 2 and ChatGPT programs, Stability AI’s Stable Diffusion program, and Midjourney’s self-titled program—are able to generate new images, texts, and other content (or “outputs”) in response to a user’s textual prompts (or “inputs”). These generative AI programs are “trained” to generate such works partly by exposing them to large quantities of existing works such as writings, photos, paintings, and other artworks. This Legal Sidebar explores questions that courts and the U.S. Copyright Office have begun to confront regarding whether the outputs of generative AI programs are entitled to copyright protection as well as how training and using these programs might infringe copyrights in other works.

Resources for exploring ChatGPT and higher education – From Bryan Alexander: “What might ChatGPT mean for higher education and society?”

Generative AI Tools and Resources – From OTL Senior Instructional Designer Kim DeBacco

The Robots are Coming, the Robots Are Coming! – Nah, the robots are here (Podcast episode) – “In this episode, we’ll chat through what I think the arrival of ChatGPT means for us in higher ed. Key point: Yes, we can try to get around it, but really, we should invite it in.”

ChatGPT is enabling script kiddies to write functional malware – “Researchers at security firm Check Point Research reported Friday that within a few weeks of ChatGPT going live, participants in cybercrime forums—some with little or no coding experience—were using it to write software and emails that could be used for espionage, ransomware, malicious spam, and other malicious tasks.”

The UCLA Academic Senate has posted, “Teaching Guidance for ChatGPT and Related AI Developments” for faculty. What you need to know:

OTL hosted a session titled, “Course Design Opportunities with AI” as part of the campus AI in Action: Exploring AI’s Potential in Teaching and Learning series. (May 16, 2023)

It has often been pointed out that ChatGPT can produce incorrect text: it will output incorrect dates, assign people to incorrect occupations or disciplines, create fictitious academic citations, etc. Because of this, it may be easier to share DALL-E with people trying to understand these technologies. When DALL-E produces an image, we don’t generally focus on whether its image is “right or wrong.”

ChatGPT and DALL-E work on technically similar underlying foundations (“generative” machine learning models). Briefly, this class of AI tools uses source data culled from the Internet (ChatGPT’s source data are text; DALL-E’s source data are images), then produces an original output (ChatGPT produces text; DALL-E produces images). The mind-boggling thing to know is that, as with DALL-E, ChatGPT is a machine that automatically creates unique, original work.

However, assessing ChatGPT with a “right vs. wrong” lens misses out on what it is doing. At this stage of its development, ChatGPT’s aim is to produce human-like text. Getting things “right” is not necessarily its primary goal.

The technical marvel is as if your sewing machine suddenly started mopping the floor. While it may not be very good at mopping the floor (yet), the fact that the machine does it at all is the thing to marvel at, because the machine has learned to do something without you teaching it what to do:

large-scale language models trained on large amounts of multi-lingual data and source code are capable of improving the state-of-the-art across a wide variety of natural language, translation, and coding tasks, despite never having been trained to specifically perform those tasks (From: “Google Research, 2022 & beyond: Language, vision and generative models.” Emphasis added.)

ChatGPT does not understand what it produces: it can make a joke, but does not understand humor. Thus, focusing on “right vs. wrong” is looking past its achievement. Imagine the following verse never existed and it came out of ChatGPT:

Hey diddle-diddle

The cat and the fiddle,

The cow jumped over the moon.

Overlooking what ChatGPT is doing might lead someone to say, “Well, that verse is wrong because cats can’t play the violin and cows cannot jump over the moon.” At this stage of the technology, ChatGPT is not intended to be factually correct: we should instead be impressed that, given a prompt, a machine can create new, original, human-passable text.

U Wisconsin describes this distinction: “ChatGPT can write with correct grammar and confident flow, but cannot create accurate citations or write with much depth. This is because it creates word patterns, with some variability or randomness, but does not generate meaning (Warner, 2022).” (https://idc.ls.wisc.edu/guides/using-artificial-intelligence-in-the-classroom/)

With time, this will all change, because these technologies are evolving rapidly. Google and Microsoft are building generative AI into their search engines. In the near future, as these tools increasingly aim for factual accuracy and search engines provide more conversational output, holding the companies responsible for errors will make more sense.

A curated collection of ChatGPT and AI links, including: